How Transparent Edge secured a public institution’s website

26 Nov 25

The stability and availability of a website are fundamental pillars for any entity that offers critical online services. For highly visible public institutions, the requirement is even stricter, especially amid persistent cyberattacks by hacktivist groups.

This case study describes how Transparent Edge’s web cybersecurity platform was deployed at a major Spanish public institution under constant denial-of-service attacks. The main objective was to stop the recurring outages of its portals caused by distributed denial-of-service (DDoS) attacks and to stabilize the availability of its websites.

The institution manages critical consultation services that professionals and citizens require for decision-making. Even a brief outage of its websites directly disrupted essential operations.

The underlying technology stack added its own challenges. The CMS used for publishing is not designed for high-concurrency scenarios: it consumed excessive resources at the origin and was fragile when faced with request patterns that force access to the backend.

The origin server processed every request, even when the content was static, meaning that relatively modest traffic volumes degraded the service. This quickly resulted in timeouts and service outages, amplifying the impact of any attack.

Just as onboarding began, the attacks intensified, both large-scale attacks and application-layer bot attacks, attributed to the NoName057 group. Very slow responses signaled that the origin was saturated, and the websites went down repeatedly.

The challenge was to ensure their websites were available, prevent user concurrency from crashing their portals, repel cyberattacks, and stabilize the experience for legitimate users.

The Transparent Edge team addressed the problem with a progressive defensive architecture deployment.

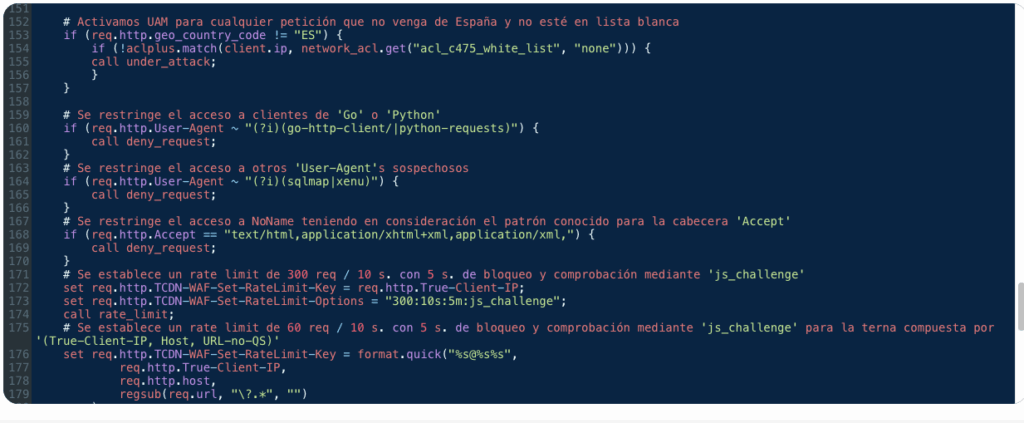

The first step was to activate Under Attack Mode (UAM). This feature combines Perimetrical’s anti-DDoS mitigation with browser-level challenges to quickly filter out unwanted traffic.
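The case study does not describe how the browser challenges are implemented. As a generic illustration only, a common pattern is for the edge to issue a signed clearance token once a browser has solved a challenge, and to verify that token on later requests. The sketch below assumes an HMAC-SHA256 token bound to the client IP with a fixed lifetime; `SECRET`, `TOKEN_TTL`, `issue_token`, and `verify_token` are hypothetical names.

```python
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical edge secret; a real deployment would keep it out of code and rotate it.
SECRET = b"example-edge-secret"
TOKEN_TTL = 3600  # assumed lifetime of a passed challenge, in seconds


def _sign(client_ip: str, expires: int) -> str:
    payload = f"{client_ip}|{expires}".encode()
    return hmac.new(SECRET, payload, hashlib.sha256).hexdigest()


def issue_token(client_ip: str, now: Optional[float] = None) -> str:
    """Issue a clearance token after the browser has solved the challenge."""
    now = time.time() if now is None else now
    expires = int(now + TOKEN_TTL)
    return f"{expires}.{_sign(client_ip, expires)}"


def verify_token(client_ip: str, token: str, now: Optional[float] = None) -> bool:
    """Accept the token only if it is well formed, unexpired, and bound to this IP."""
    now = time.time() if now is None else now
    try:
        expires_str, signature = token.split(".", 1)
        expires = int(expires_str)
    except ValueError:
        return False
    if expires < now:
        return False
    # Constant-time comparison avoids leaking signature bytes through timing.
    return hmac.compare_digest(signature, _sign(client_ip, expires))
```

Requests without a valid token would be served the challenge again. Binding the token to the IP prevents a solved challenge from being replayed from other addresses, at the cost of re-challenging users whose IP changes.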

The anti-DDoS engine analyzes a wide variety of signals (fingerprints, behavioral patterns, and network reputation) to detect and block sophisticated traffic in real time.

The system adapts to new threats as they appear. If the attacker changes their signature, geographic origin, or request rate, detection falls back on other behavioral indicators.
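The case study does not disclose how the anti-DDoS engine weighs its signals. The Python sketch below only illustrates the general idea of combining independent signals so that changing one of them is not enough to evade detection; the weights, `RATE_LIMIT`, `THRESHOLD`, and all names are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical weights, rate limit, and threshold, chosen only for illustration.
WEIGHTS = {"fingerprint": 0.5, "rate": 0.3, "reputation": 0.2}
RATE_LIMIT = 120  # requests per minute treated as fully suspicious
THRESHOLD = 0.45


@dataclass
class RequestSignals:
    bad_fingerprint: bool     # client fingerprint matches a known bot tool
    requests_per_minute: int  # behavioral signal for this client
    reputation: float         # network reputation: 0.0 clean .. 1.0 malicious


def bot_score(s: RequestSignals) -> float:
    """Combine independent signals into one score between 0.0 and 1.0."""
    score = WEIGHTS["fingerprint"] if s.bad_fingerprint else 0.0
    # The rate contribution saturates once the client reaches RATE_LIMIT.
    score += WEIGHTS["rate"] * min(s.requests_per_minute / RATE_LIMIT, 1.0)
    score += WEIGHTS["reputation"] * s.reputation
    return score


def is_suspicious(s: RequestSignals) -> bool:
    return bot_score(s) >= THRESHOLD
```

A client that swaps its fingerprint but keeps a high request rate from a poor-reputation network still crosses the threshold, because the remaining signals carry enough weight on their own.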

The need to reduce requests to the origin was addressed through an optimized caching setup. Caching was configured for static assets (images, stylesheets) and HTML content, resulting in a substantial improvement in hit rate and performance.

This immediately reduced resource consumption at the origin, and with less pressure on the infrastructure, the recurring timeouts disappeared.
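A caching policy of this kind boils down to deciding, per request, whether a response may be stored and for how long. The sketch below assumes path-based rules: a long TTL for static assets, a short TTL for HTML, and a bypass list for paths that must always reach the origin. The TTL values, file extensions, bypass paths, and `cache_ttl` are illustrative assumptions, not the institution’s configuration.

```python
import re
from typing import Optional

# Hypothetical TTLs in seconds; real values depend on how often content changes.
STATIC_TTL = 86400
HTML_TTL = 300

STATIC_EXT = re.compile(r"\.(?:png|jpe?g|gif|webp|svg|ico|css|js|woff2?)$", re.IGNORECASE)
# Hypothetical paths that must always reach the origin.
BYPASS = re.compile(r"^/(?:admin|login|search)(?:/|$)")


def cache_ttl(method: str, path: str, content_type: str) -> Optional[int]:
    """Return a TTL in seconds, or None if the response must not be cached."""
    if method not in ("GET", "HEAD") or BYPASS.match(path):
        return None
    if STATIC_EXT.search(path):
        return STATIC_TTL
    if content_type.startswith("text/html"):
        return HTML_TTL
    return None
```

A short HTML TTL is a compromise: even a few minutes of caching absorbs bursts of identical requests, while editorial changes still appear quickly.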

To close attack vectors that exploit how friendly permalinks are composed (especially on multilingual sites) and to improve origin performance, redirects were implemented with regular expressions in VCL, moving that part of the logic to the edge.
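The actual rules were written in VCL and are not published. As a language-neutral illustration, the Python sketch below applies an ordered list of regular-expression redirects, first match wins, to canonicalize URL variants before they reach the CMS. The language list, the three rules, and `edge_redirect` are hypothetical examples of permalink normalization.

```python
import re
from typing import Optional, Tuple

LANGS = "es|en|ca"  # hypothetical set of site languages

# Hypothetical rules: (pattern, replacement). The first matching rule wins,
# much like a chain of `if (req.url ~ "...")` blocks in VCL.
REDIRECT_RULES = [
    # Collapse repeated slashes: /es//news -> /es/news
    (re.compile(r"//+"), "/"),
    # Duplicated language prefix: /es/es/news -> /es/news
    (re.compile(rf"^/({LANGS})/\1(/.*)?$"), r"/\1\2"),
    # Trailing index file: /en/news/index.html -> /en/news/
    (re.compile(r"^(.*/)index\.html?$"), r"\1"),
]


def edge_redirect(path: str) -> Optional[Tuple[int, str]]:
    """Return (status, location) if the path should be redirected at the edge."""
    for pattern, replacement in REDIRECT_RULES:
        if pattern.search(path):
            return 301, pattern.sub(replacement, path)
    return None
```

Because each variant is redirected at the edge, the origin never has to resolve it, and the canonical URL can be served from cache.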

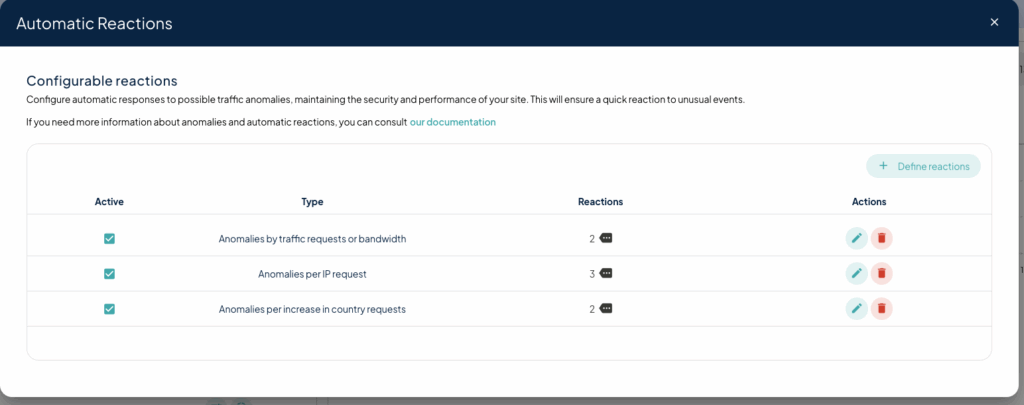

The WAF was initially activated in detection mode and, based on real traffic data, automatic reactions were parameterized for different anomalies.

The strategy is to raise a challenge or block only when an event exceeds what is plausible for a human user.
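One simple way to express "more than a human could plausibly do" is a per-IP sliding window with two thresholds. The sketch assumes a 10-second window, a challenge above 30 requests, and a block above 100; these numbers and the `ThresholdReactor` class are hypothetical. With `enforce=False` it only logs what it would have done, which mirrors starting the WAF in detection mode and tuning from real data.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Tuple

# Hypothetical thresholds, as if tuned from detection-mode data.
WINDOW_SECONDS = 10.0
CHALLENGE_ABOVE = 30   # more requests than a person plausibly makes in the window
BLOCK_ABOVE = 100      # clearly automated


class ThresholdReactor:
    def __init__(self, enforce: bool) -> None:
        self.enforce = enforce  # False = detection mode: log only, never act
        self.hits: Dict[str, Deque[float]] = defaultdict(deque)
        self.log: List[Tuple[str, float, int, str]] = []

    def decide(self, ip: str, now: float) -> str:
        window = self.hits[ip]
        window.append(now)
        # Discard timestamps that have slid out of the window.
        while window and window[0] <= now - WINDOW_SECONDS:
            window.popleft()
        count = len(window)
        if count > BLOCK_ABOVE:
            action = "block"
        elif count > CHALLENGE_ABOVE:
            action = "challenge"
        else:
            action = "allow"
        if action != "allow":
            self.log.append((ip, now, count, action))
        return action if self.enforce else "allow"
```

Running with `enforce=False` first produces a log of would-be challenges and blocks that can be checked against real traffic before the thresholds are enforced.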

This setup included an auto-blacklist that automatically catalogs and groups IPs identified as sources of DDoS attacks.
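The case study does not say how the auto-blacklist groups addresses. One plausible approach, shown here purely as an assumption, is to aggregate offending IPv4 addresses by /24 network: networks with several offenders are listed as a whole, and isolated hosts are listed individually. The /24 prefix length, `min_hosts`, and `build_blacklist` are hypothetical.

```python
import ipaddress
from collections import defaultdict
from typing import Dict, Iterable, List


def build_blacklist(offending_ips: Iterable[str], min_hosts: int = 3) -> List[str]:
    """Aggregate offending IPv4 addresses into /24 networks where they cluster."""
    unique = {ipaddress.IPv4Address(ip) for ip in offending_ips}
    by_network: Dict[ipaddress.IPv4Network, List[ipaddress.IPv4Address]] = defaultdict(list)
    for addr in unique:
        # strict=False derives the enclosing /24 from a host address.
        by_network[ipaddress.IPv4Network(f"{addr}/24", strict=False)].append(addr)
    entries: List[str] = []
    for network, members in by_network.items():
        if len(members) >= min_hosts:
            entries.append(str(network))  # enough offenders: list the whole /24
        else:
            entries.extend(f"{addr}/32" for addr in members)  # list only the hosts seen
    return sorted(entries)
```

Listing a whole /24 shrinks the blacklist and catches neighboring attack hosts, but it can also catch legitimate users on the same network, so the aggregation threshold is a trade-off.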


After implementation, the results were quickly apparent. Volumetric attacks stopped taking down websites, and automated traffic was filtered before reaching the backend, without impacting users.

The dashboard analytics revealed a large number of scrapers, URL sequences forcing direct requests to the backend, cross-site scripting (XSS) attempts, and JSON-based SQL injections targeting forms and search functionalities that had previously gone undetected in real time.

An early operational incident occurred when a third-party accessibility service embedded via an iframe was found not to be working correctly. Because it originated from outside Spain and executed code, it was initially blocked by geo-policies and UAM. This was resolved by whitelisting the external provider’s IP address.

Under normal operation, any IP address not on the whitelist that behaves like a bot receives a JavaScript challenge. Specific adjustments were needed for news agencies and partner organizations that perform legitimate web scraping (often with automation tools such as Selenium, which show up in the user agent). Whitelists and controlled limits were established so that their activity does not impact availability.
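The order of checks matters: an allowlisted source must be evaluated before geo-policies and bot challenges, or it ends up blocked, as the accessibility provider initially was. The sketch below assumes an allowlist of networks with optional per-minute caps for partners that scrape legitimately, a Spain-only geo-policy, and a JavaScript challenge for bot-like traffic; the addresses (taken from documentation ranges), caps, action names, and `decide` are all hypothetical.

```python
import ipaddress
from typing import Dict, Optional, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]

# Hypothetical allowlist (documentation address ranges) mapped to a per-minute
# request cap; None means uncapped.
ALLOWLIST: Dict[Network, Optional[int]] = {
    ipaddress.ip_network("192.0.2.10/32"): None,   # e.g. accessibility provider
    ipaddress.ip_network("198.51.100.0/28"): 600,  # e.g. news agency scraper
}
ALLOWED_COUNTRIES = {"ES"}  # assumed geo-policy


def decide(ip: str, country: str, looks_like_bot: bool, requests_last_minute: int) -> str:
    addr = ipaddress.ip_address(ip)
    # 1. Allowlisted sources skip geo-policies and challenges, within their cap.
    for network, cap in ALLOWLIST.items():
        if addr in network:
            if cap is not None and requests_last_minute > cap:
                return "throttle"
            return "allow"
    # 2. Geo-policy for everything else.
    if country not in ALLOWED_COUNTRIES:
        return "block"
    # 3. Bot-like behavior from a non-allowlisted IP gets a JavaScript challenge.
    if looks_like_bot:
        return "js_challenge"
    return "allow"
```

The per-entry cap is one way to express the controlled limits for partner scrapers: they bypass the challenge, but not without bound.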

Through these iterations, the client gained a clear view of what is legitimate traffic, what is noise, and what is an anomaly that requires mitigation.

The dashboard is no longer a reactive panel but a tool for monitoring and observation: the technical team works proactively with reliable, real-time data instead of reacting to incidents.

The integration did not require restructuring existing processes. Mitigating DDoS attacks and filtering invalid traffic reduced pressure on the backend, and caching reduced the number of requests reaching the origin.

With the platform active, the public institution operates with a high hit rate (close to 86%), even though exceptions defined at the client’s request let certain traffic flows through to the origin. The volume of traffic reaching the infrastructure has dropped, and its websites no longer appear on lists of sites taken down during cyberattack campaigns.
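The hit rate is the share of requests answered from cache, so its complement approximates the share that still reaches the origin. The sketch below makes that arithmetic explicit with made-up counts; it assumes that requests deliberately passed to the origin under the client’s exceptions count toward the total, which is one common convention but is not confirmed by the source. `hit_ratio` and `origin_requests` are hypothetical helpers.

```python
def hit_ratio(hits: int, misses: int, passes: int = 0) -> float:
    """Share of all requests answered from cache.

    Assumption: requests deliberately passed to the origin (the client's
    exceptions) count in the denominator, so they lower the ratio.
    """
    total = hits + misses + passes
    return hits / total if total else 0.0


def origin_requests(misses: int, passes: int) -> int:
    """Requests the origin still has to serve: cache misses plus passes."""
    return misses + passes
```

With hypothetical counts of 860 hits, 100 misses, and 40 passes, the ratio is 0.86 and the origin serves 140 of every 1,000 requests instead of all 1,000.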

The Transparent Edge platform filters out noise and continuously performs mitigation, delivering reliable traffic metrics, stable web performance, and content protection.

The client’s IT team uses the dashboard to configure custom rules and settings autonomously. The platform fits into their daily workflow and service logic, and their work now focuses on defining criteria rather than resolving incidents.

The implementation, which began under operational pressure, has become a tool for control and resilience. Valid traffic flows through, while invalid traffic is neutralized before it reaches the origin. IT teams can focus on keeping critical resources available and stable for the diverse range of users who need them.