How Transparent Edge protected a ticketing site from bot-caused ticket scalping

26 Aug 25

– Are there any tickets left? Yes? Give them to me! Give them to me! Give them to me!

This is the story of a ticketing website for a monument complex that crashed almost daily because it was indiscriminately attacked between 11:00 PM and 2:00 AM. It could just as well be the story of any other site you know that sells scarce goods.

We’ll tell you how Transparent Edge intervened to help them regain control of their operations, preventing financial and reputational losses.

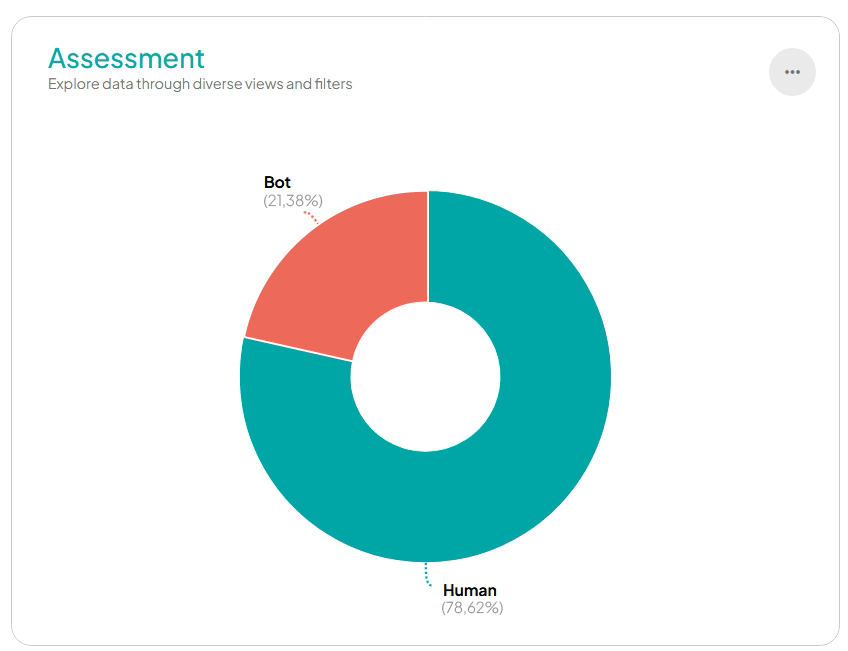

Automated bots bought up tickets and then sold them to travel agencies, which profited by reselling them at inflated prices. This harmed both users and the company, which sold its tickets at a fair price.

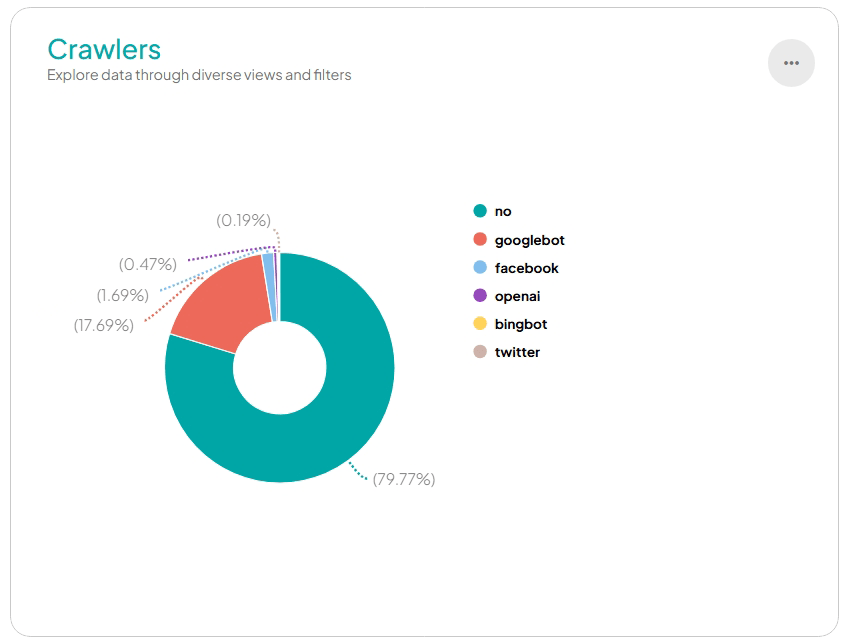

The bots tirelessly scoured the entire site in search of the purchase URL, generating excessive traffic along the way and even hitting the least-visited sections, such as pages that exist only for SEO or to meet legal requirements.

The frantic search for available tickets ended up disrupting the website’s availability, harming genuine users and the business.

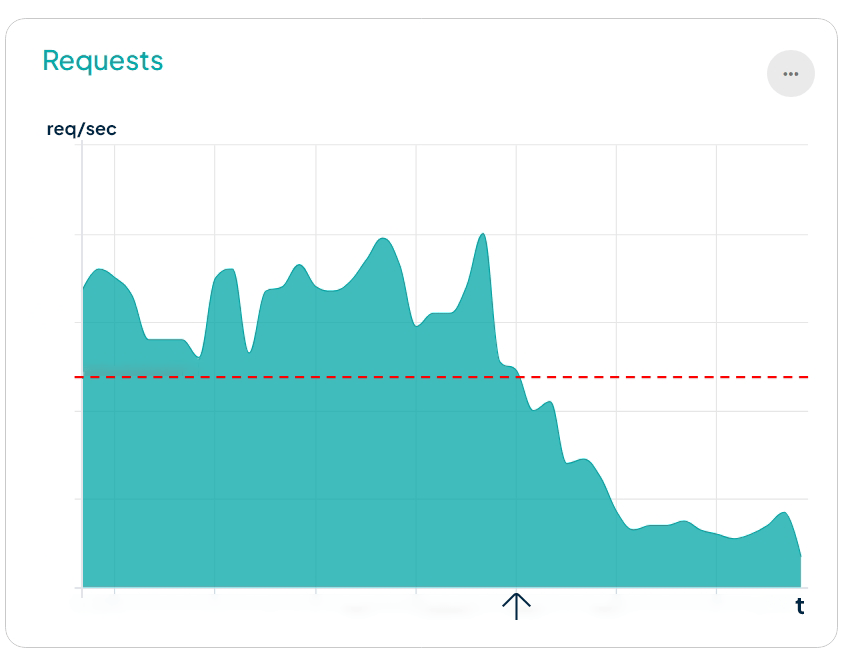

The following infographic shows how the incoming request rate (requests per second) compromised the website’s availability: the site crashed whenever traffic exceeded 30 rps.

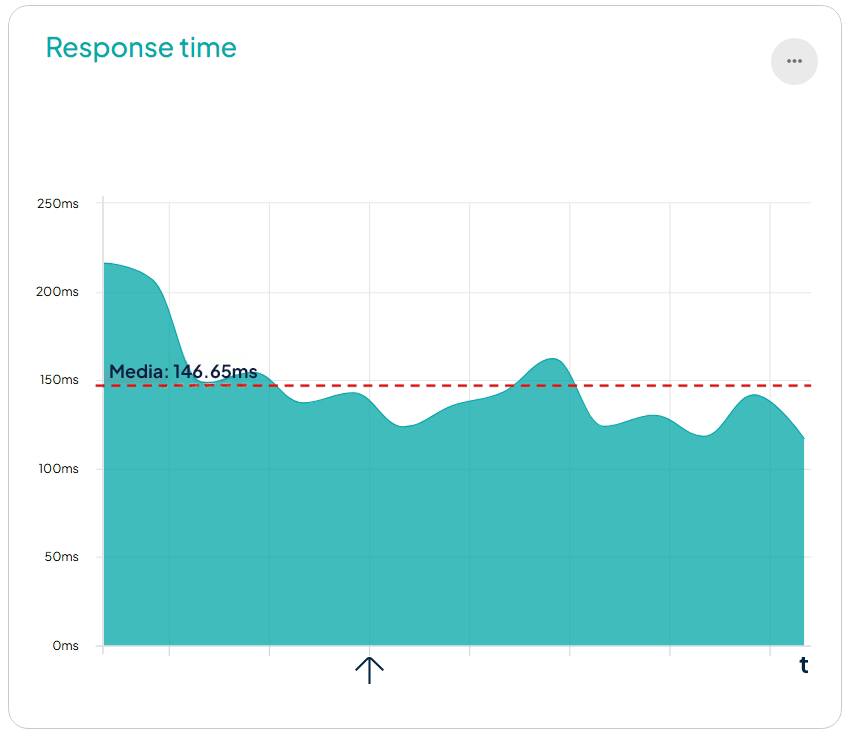

Response time also compromised the site’s accessibility. Under excessive load, response times rose above 2 seconds and the server returned HTTP 408 (Request Timeout) errors.

The perfect combination for Transparent Edge to spring into action: a frequently down site, high infrastructure costs due to excessive traffic, and a clear violation of fair use rules.

The OWASP Automated Threat Handbook for Web Applications defines scalping (OAT-005) as a threat designed to “obtain exclusive and/or limited-availability goods/services through unfair methods.”

Such an outrage called for an intervention, and that’s what we did.

The first step was to place the CDN in front of the origin, so that users connect to the CDN platform rather than directly to the website. This measure requires no infrastructure changes and is quick, with no service interruptions or data loss. From that moment on, only requests from the CDN nodes reached the origin server, and the traffic it received dropped by more than 50%.

The next step was to activate Anti-DDoS protection for layers 3, 4, and 7 and to set a rate limit. Since it was still unknown where the traffic bringing down the site was coming from, a maximum request threshold was set, and any traffic exceeding it would be blocked.

The HTTP 429 (Too Many Requests) status code indicates that a client, in this case an IP address, has sent too many requests in a given amount of time, whether because it is deliberately abusive or simply sending requests too fast. In response, Under Attack Mode (UAM) is activated: it blocks those requests and applies rate limiting to mitigate the attack, preventing the flood of raw traffic from saturating resources and bringing down the server.
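The rate limit described above can be illustrated with a sliding-window counter. This is a minimal Python sketch, not Transparent Edge's implementation: it assumes a single in-memory process, identifies clients by IP address, and the `limit` and `window` values are hypothetical. Requests over the limit receive the 429 status.

```python
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allow at most `limit` requests per client IP in any rolling `window` of seconds."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        # Timestamps of accepted requests, oldest first, per client IP.
        self._hits: dict = defaultdict(deque)

    def check(self, client_ip: str, now: float) -> int:
        """Return the HTTP status for this request: 200 if allowed, 429 if over the limit."""
        hits = self._hits[client_ip]
        # Forget requests that have slid out of the window.
        while hits and hits[0] <= now - self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return 429  # Too Many Requests; rejected requests are not counted
        hits.append(now)
        return 200


# Hypothetical limit: 3 requests per second for one client.
limiter = SlidingWindowRateLimiter(limit=3, window=1.0)
print([limiter.check("203.0.113.7", t) for t in (0.0, 0.1, 0.2, 0.3, 1.05)])
```

The fourth request arrives while three are still inside the one-second window, so it is refused; by 1.05 s the first request has expired and traffic is accepted again. Not counting rejected requests is a design choice; counting them would keep a persistent offender blocked for longer.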

Some IP addresses were visiting the site frequently and indiscriminately. The next step was therefore to set a low score threshold, so that IPs with a poor reputation, or coming from an ASN with a high risk score, would be blocked.
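One way to picture the scoring threshold is as two cut-offs checked on every request. The sketch below is hypothetical: the article does not say how reputation or ASN scores are computed, so `ip_reputation` (0 = bad, 100 = good) and `asn_risk` (0 = clean, 100 = abusive) are assumed scales, and the default thresholds are examples only.

```python
from dataclasses import dataclass


@dataclass
class ClientReputation:
    ip_reputation: int  # hypothetical scale: 0 (bad) .. 100 (good)
    asn_risk: int       # hypothetical scale: 0 (clean) .. 100 (abusive)


def should_block(rep: ClientReputation, min_ip_reputation: int = 60, max_asn_risk: int = 30) -> bool:
    """Block when the IP's reputation is too low or its network (ASN) is too risky.

    The default thresholds are illustrative, deliberately strict values.
    """
    return rep.ip_reputation < min_ip_reputation or rep.asn_risk > max_asn_risk


print(should_block(ClientReputation(ip_reputation=45, asn_risk=10)))  # poor IP reputation
print(should_block(ClientReputation(ip_reputation=80, asn_risk=55)))  # risky ASN
print(should_block(ClientReputation(ip_reputation=80, asn_risk=10)))  # neither cut-off reached
```

Making the thresholds stricter blocks more clients; relaxing them later only requires changing the two parameters.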

If an IP sends more than a certain number of requests per second, it can be penalized for a period of time (for example, 12 hours), and points can also be added to its score, especially for repeat offenses.
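The penalty-and-points mechanism can be sketched as a small per-IP "penalty box". Only the 12-hour example comes from the text; the class, its fields and the escalation rule (each repeat offense adds one more point than the previous one) are assumptions for illustration.

```python
from dataclasses import dataclass, field

TWELVE_HOURS = 12 * 3600  # example penalty length from the text


@dataclass
class PenaltyBox:
    """Hypothetical per-IP penalty tracker: block offenders and add points for repeat offenses."""

    max_rps: float
    penalty_seconds: float = TWELVE_HOURS
    blocked_until: dict = field(default_factory=dict)  # ip -> unblock timestamp
    offenses: dict = field(default_factory=dict)       # ip -> number of offenses
    points: dict = field(default_factory=dict)         # ip -> accumulated points

    def is_blocked(self, ip: str, now: float) -> bool:
        return self.blocked_until.get(ip, 0.0) > now

    def observe(self, ip: str, rps: float, now: float) -> bool:
        """Record an IP's measured request rate; return True if it is (now) penalized."""
        if self.is_blocked(ip, now):
            return True
        if rps <= self.max_rps:
            return False
        count = self.offenses.get(ip, 0) + 1
        self.offenses[ip] = count
        # Repeat offenses weigh more: +1 point the first time, +2 the second, and so on.
        self.points[ip] = self.points.get(ip, 0) + count
        self.blocked_until[ip] = now + self.penalty_seconds
        return True


box = PenaltyBox(max_rps=10)
box.observe("203.0.113.7", rps=25, now=0)                 # first offense: blocked for 12 hours
box.observe("203.0.113.7", rps=25, now=TWELVE_HOURS + 1)  # repeat offense after the penalty expires
print(box.points["203.0.113.7"])
```

Accumulated points could then feed the reputation score used for blocking decisions, so that repeat offenders are treated more harshly over time.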

These parameters are tailored to each type of site and situation. In this case, given the frequency and volume of scalping attempts, restrictive values were chosen, which could later be relaxed if needed.

Bots try to grab available tickets using browser-automation tools such as Selenium, an open-source suite designed to automate web browsers. This allows them to bombard the website with ticket requests.

Once the bots found the ticket sales URL, they went through the purchase process just like a human user, except that they completed it in a fraction of the time a real person needs.

Bots don’t just grab a few tickets; they can sweep up the entire inventory, because ordinary shoppers can never outpace them. In the short term this generates user complaints; in the medium term it erodes trust in the website.

The most sophisticated scalper bot operators shave extra milliseconds off the purchase process by distributing their servers geographically to minimize network latency.

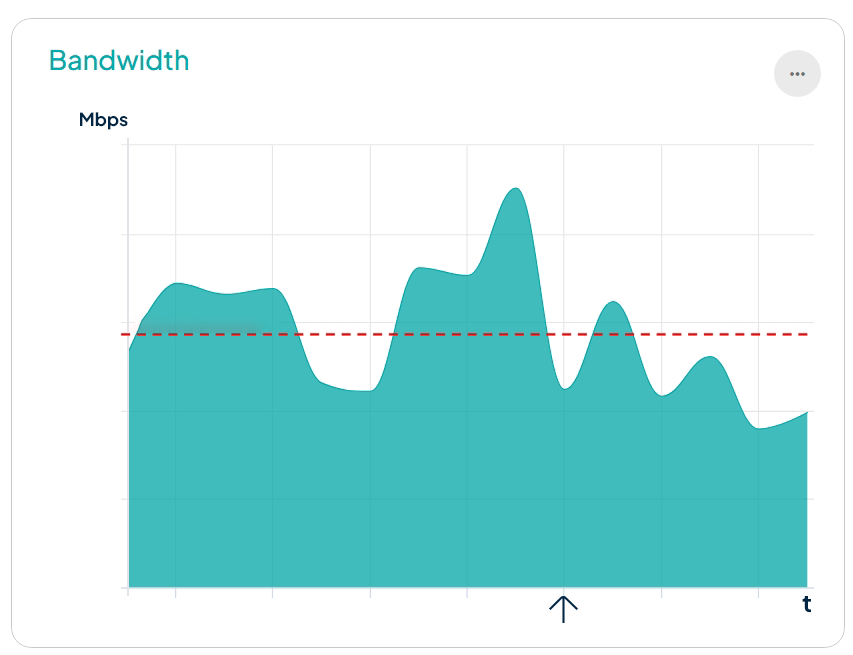

Meanwhile, bots consume significant bandwidth and hamper website speed and performance. All of this results in increased infrastructure costs and the need for the IT team to constantly put out fires.

Placing JavaScript (JS) challenges in front of the site keeps automated traffic out, effectively restricting ticket sales to human users, the only ones who can reach the purchase process.

Another layer of protection against automated traffic is based on the geographic origin of requests. The Transparent Edge team configured the platform so that requests from IP addresses in certain regions are automatically presented with a JavaScript challenge. The test is imperceptible to legitimate users, yet it hinders bots.
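The server-side decision behind a region-based JS challenge can be sketched as follows. This is a hypothetical illustration, not Transparent Edge's mechanism: the article does not name the regions (so `CHALLENGED_COUNTRIES` holds a placeholder code), and it assumes that a client which passes the challenge receives an HMAC-signed clearance token bound to its IP and an expiry time. The browser-side challenge script itself is out of scope.

```python
import hashlib
import hmac
from typing import Optional

SECRET_KEY = b"change-me"      # hypothetical server-side signing key
CHALLENGED_COUNTRIES = {"XX"}  # placeholder; the article does not name the regions


def issue_clearance(ip: str, expires_at: int) -> str:
    """Token handed to a client after it passes the JS challenge, bound to its IP and an expiry time."""
    message = f"{ip}|{expires_at}".encode()
    signature = hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()
    return f"{expires_at}.{signature}"


def has_valid_clearance(ip: str, token: Optional[str], now: int) -> bool:
    if not token:
        return False
    expires_str, _, signature = token.partition(".")
    if not expires_str.isdecimal() or int(expires_str) < now:
        return False  # malformed or expired token
    expected = issue_clearance(ip, int(expires_str)).partition(".")[2]
    # Compare as bytes in constant time (str comparison would reject non-ASCII input with an error).
    return hmac.compare_digest(signature.encode(), expected.encode())


def needs_js_challenge(ip: str, country: str, token: Optional[str], now: int) -> bool:
    """Challenge requests from the listed regions unless they carry a valid clearance token."""
    return country in CHALLENGED_COUNTRIES and not has_valid_clearance(ip, token, now)


token = issue_clearance("203.0.113.7", expires_at=1_000)
print(needs_js_challenge("203.0.113.7", "XX", None, now=500))   # no token yet: challenge
print(needs_js_challenge("203.0.113.7", "XX", token, now=500))  # valid token: let through
```

Binding the token to the IP means a bot farm cannot solve the challenge once in a real browser and share the token across many addresses.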

Bots sophisticated enough to behave like browsers or mimic human behavior can eventually get past the Anti-DDoS protection, which is why additional layers are needed. In this case, a threshold was defined beyond which a client’s browsing is considered an anomaly and triggers an automated response. For example, a client making more than 100 requests per minute is no longer a person buying tickets but a crawler, and the system reacts by blocking its access for 24 hours.
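The 100-requests-per-minute rule can be sketched with fixed one-minute windows per IP. Only the threshold and the 24-hour block come from the text; the class name and the fixed-window approach are assumptions, and a production version would also discard counters for past minutes.

```python
from collections import Counter

CRAWLER_THRESHOLD = 100            # requests per minute, from the text
CRAWLER_BLOCK_SECONDS = 24 * 3600  # 24-hour block, from the text


class CrawlerDetector:
    """Count requests per IP in fixed one-minute windows and block IPs that exceed the threshold."""

    def __init__(self) -> None:
        self.counts: Counter = Counter()  # (ip, minute index) -> requests
        self.blocked_until: dict = {}     # ip -> unblock timestamp

    def allow(self, ip: str, now: float) -> bool:
        if self.blocked_until.get(ip, 0.0) > now:
            return False
        window = (ip, int(now // 60))
        self.counts[window] += 1
        if self.counts[window] > CRAWLER_THRESHOLD:
            self.blocked_until[ip] = now + CRAWLER_BLOCK_SECONDS
            return False
        return True


detector = CrawlerDetector()
results = [detector.allow("203.0.113.7", now=10.0) for _ in range(101)]
print(results.count(True), results[-1])           # the 101st request in the minute is refused
print(detector.allow("203.0.113.7", now=3600.0))  # still blocked an hour later
```

Fixed windows are cheap but let a client send up to twice the limit across a minute boundary; a sliding window closes that gap at the cost of storing timestamps.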

| Type of anomaly | Reaction |
|---|---|
| Requests per IP | Block the IP for 12 hours |
| Total request volume | Activate Under Attack Mode (UAM) for 4 hours |
| Bandwidth | Activate UAM for 4 hours |
| Surge in requests from a country | Activate UAM for 4 hours |
| Crawler behavior from an IP | Block the IP for 24 hours |
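The anomaly-to-reaction table maps naturally onto a lookup table in code. A minimal sketch: the anomaly types, actions and durations mirror the table, while the enum, the action strings `block_ip` and `under_attack_mode`, and the `react` helper are hypothetical names.

```python
from dataclasses import dataclass
from enum import Enum


class Anomaly(Enum):
    REQUESTS_PER_IP = "requests per IP"
    TOTAL_TRAFFIC = "total request volume"
    BANDWIDTH = "bandwidth"
    COUNTRY_SURGE = "surge in requests from a country"
    IP_CRAWLER = "crawler behavior from an IP"


@dataclass(frozen=True)
class Reaction:
    action: str  # hypothetical action names: "block_ip" or "under_attack_mode"
    hours: int


REACTIONS = {
    Anomaly.REQUESTS_PER_IP: Reaction("block_ip", 12),
    Anomaly.TOTAL_TRAFFIC: Reaction("under_attack_mode", 4),
    Anomaly.BANDWIDTH: Reaction("under_attack_mode", 4),
    Anomaly.COUNTRY_SURGE: Reaction("under_attack_mode", 4),
    Anomaly.IP_CRAWLER: Reaction("block_ip", 24),
}


def react(anomaly: Anomaly, now: float) -> tuple:
    """Return the action to take and the timestamp (in seconds) at which it expires."""
    reaction = REACTIONS[anomaly]
    return reaction.action, now + reaction.hours * 3600


print(react(Anomaly.IP_CRAWLER, now=0))
print(react(Anomaly.COUNTRY_SURGE, now=0))
```

Keeping the policy in data rather than in branching code makes it easy to tighten or relax individual reactions, as the article describes doing with the thresholds.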

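A WAF can operate in detection mode, where it only logs the requests that match its rules, or in active mode, where it also blocks them. Detection mode is useful for checking rules against real traffic without risking blocking legitimate customers; active mode is for rules that have proved reliable. At different times, we may need one mode or the other.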
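The difference between the two modes can be shown with a single rule. In this hypothetical sketch the rule is the NoName(057) `Accept`-header pattern from the VCL example later in this article; the `evaluate` function, the detections list and the 403 status for blocked requests are assumptions.

```python
from enum import Enum


class WafMode(Enum):
    DETECTION = "detection"  # record rule matches, let the request through
    ACTIVE = "active"        # record rule matches and block the request


# Example rule: the NoName(057) Accept-header pattern from the article's VCL example.
NONAME_ACCEPT = "text/html,application/xhtml+xml,application/xml,"


def evaluate(headers: dict, mode: WafMode, detections: list) -> int:
    """Return the HTTP status the WAF would produce; 403 for blocked requests is an assumption."""
    if headers.get("Accept") == NONAME_ACCEPT:
        detections.append(f"noname-accept matched in {mode.value} mode")
        if mode is WafMode.ACTIVE:
            return 403
    return 200


log: list = []
print(evaluate({"Accept": NONAME_ACCEPT}, WafMode.DETECTION, log))  # logged, not blocked
print(evaluate({"Accept": NONAME_ACCEPT}, WafMode.ACTIVE, log))     # logged and blocked
print(log)
```

Both modes record the match, so switching from detection to active changes only the outcome for the client, not the visibility for the operators.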

Because some better-programmed bots slow down their actions to simulate human behavior, Transparent Edge engineers added further layers of protection: regular expressions that validate the parameters passed to the purchase URLs. This ensured that the variables reaching the backend had the expected structure and type, and it ruled out the random probes that bots might send, rejecting their access attempts.
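Parameter validation of this kind can be sketched with an allowlist of parameter names, each paired with a regular expression that must match the whole value. The article does not list the real purchase-URL parameters, so `date`, `slot`, `qty` and `type` and their formats are hypothetical.

```python
import re
from urllib.parse import parse_qs, urlsplit

# Hypothetical purchase-URL parameters; the article does not list the real ones.
PARAM_RULES = {
    "date": re.compile(r"[0-9]{4}-[0-9]{2}-[0-9]{2}"),       # e.g. 2025-08-26
    "slot": re.compile(r"(?:[01][0-9]|2[0-3]):[0-5][0-9]"),  # 24-hour HH:MM
    "qty": re.compile(r"[1-9]"),                             # 1 to 9 tickets
    "type": re.compile(r"adult|reduced|child"),
}


def is_valid_purchase_url(url: str) -> bool:
    """Accept only URLs whose query has exactly the expected parameters, each matching its pattern."""
    query = parse_qs(urlsplit(url).query, keep_blank_values=True)
    if set(query) != set(PARAM_RULES):
        return False  # missing or unexpected parameters
    for name, pattern in PARAM_RULES.items():
        values = query[name]
        if len(values) != 1 or pattern.fullmatch(values[0]) is None:
            return False  # repeated parameter or malformed value
    return True


print(is_valid_purchase_url("https://example.com/buy?date=2025-08-26&slot=10:30&qty=2&type=adult"))
print(is_valid_purchase_url("https://example.com/buy?date=2025-08-26&slot=10:30&qty=200&type=adult"))
```

Using `fullmatch` rather than `search` matters: a value such as `2'; DROP` must fail even though it starts with a digit.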

It’s natural for certain IP addresses to make frequent purchases, so we add those trusted IP addresses to a whitelist and grant them less restrictive access permissions. This doesn’t mean they have a free pass, but rather that their limits for being classified as an anomaly are more relaxed.
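The whitelist idea, relaxed limits rather than a free pass, can be sketched by looking up a per-IP limit. The trusted network below is a documentation range used as a placeholder, the base limit reuses the 100-requests-per-minute crawler threshold described above, and the 5× relaxation factor is an assumption.

```python
import ipaddress

# Placeholder trusted network (documentation range); the real whitelist is not given.
TRUSTED_NETWORKS = [ipaddress.ip_network("198.51.100.0/24")]
DEFAULT_LIMIT_PER_MINUTE = 100  # crawler threshold from the text
TRUSTED_MULTIPLIER = 5          # assumption: how much to relax limits for trusted IPs


def limit_for(ip: str) -> int:
    """Return the requests-per-minute limit for an IP: relaxed, but still finite, if trusted."""
    addr = ipaddress.ip_address(ip)
    # Membership tests between IPv4 and IPv6 objects simply return False.
    if any(addr in net for net in TRUSTED_NETWORKS):
        return DEFAULT_LIMIT_PER_MINUTE * TRUSTED_MULTIPLIER
    return DEFAULT_LIMIT_PER_MINUTE


print(limit_for("198.51.100.42"))  # trusted network
print(limit_for("203.0.113.7"))    # everyone else
```

Trusted clients still hit a ceiling, so a compromised or misbehaving whitelisted address is eventually classified as an anomaly too.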

Once the basic observability and defense model was in place, the team moved on to fine-grained tuning, using the flexibility of the Varnish Configuration Language (VCL).

For example, the configuration can call deny_request on requests sent by Python or Go scripts, or block scripts that send the header pattern associated with NoName(057).

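Example code:

```vcl
# Place these checks in vcl_recv (or a subroutine called from it).
# deny_request is a subroutine defined elsewhere in the configuration (not shown here).

# Restrict access for clients that identify themselves as Go or Python HTTP libraries.
if (req.http.User-Agent ~ "(?i)(go-http-client/|python-requests)") {
    call deny_request;
}

# Restrict access for NoName(057), based on the known pattern of its 'Accept' header.
if (req.http.Accept == "text/html,application/xhtml+xml,application/xml,") {
    call deny_request;
}
```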
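Before deploying rules like these, it helps to check which clients they would catch. This Python sketch reproduces the two conditions outside Varnish; it assumes that, for patterns this simple, Python's `re` and Varnish's PCRE engine agree, and that VCL's `~` behaves as an unanchored match (like `re.search`). The sample headers are illustrative.

```python
import re

# Same pattern as the VCL rule; "~" in VCL is an unanchored match, like re.search.
UA_PATTERN = re.compile(r"(?i)(go-http-client/|python-requests)")
NONAME_ACCEPT = "text/html,application/xhtml+xml,application/xml,"


def would_deny(user_agent: str, accept: str) -> bool:
    """True if either of the two VCL conditions would call deny_request."""
    return bool(UA_PATTERN.search(user_agent)) or accept == NONAME_ACCEPT


samples = {
    "Go-http-client/1.1": "*/*",
    "python-requests/2.31.0": "*/*",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Mozilla/5.0 (X11; Linux x86_64)": NONAME_ACCEPT,
}
for ua, accept in samples.items():
    print(would_deny(ua, accept), ua)
```

Note that the `Accept` check is an exact string comparison: a real browser's header, which ends in quality values rather than a trailing comma, does not match it.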

Website traffic has decreased by 60%. Their site has been fully operational for over a year, with zero downtime. They’ve stopped receiving phone and social media complaints about the inability to purchase tickets. Legitimate customers can purchase tickets without any problems. And they send a box of Manolitos to our tech team every month because their IT team is happy.

Do you want a calm IT team dedicated to development projects, satisfied human customers, and a website that runs fast and securely 24/7? Call us! We’ll show you how to deploy the best protection configuration for your websites and APIs, with overlapping layers of security, detection, and mitigation. Our engineers will support you with their experience, implementing optimizations customized to your needs.

And if your website or web application isn’t a ticketing site but is facing another type of attack, you can also call us. See how you can take control of your environment and enjoy your work.

#secureYourSite